Logistic Regression

Tags: Classification, Logistic, Supervised, Week2

Categories: IBM Machine Learning



Sigmoid function

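Apply the sigmoid function to the output of the linear regression, which maps it to a probability between 0 and 1:

$P(x) = \frac{1}{1+e^{-(\beta_0 + \beta_1 x)}}$

This can be rewritten as an odds ratio:

$\frac{P(x)}{1-P(x)} = e^{\beta_0 + \beta_1 x}$

and, taking the logarithm, the log odds are linear in $x$:

$\log\left(\frac{P(x)}{1-P(x)}\right) = \beta_0 + \beta_1 x$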
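As a quick numerical check of the relationship between the sigmoid and the odds, here is a minimal sketch in plain Python. The score `z` is a made-up value standing in for the linear part of the model; the helper names `sigmoid` and `odds` are illustrative and not taken from the course material.

```python
import math

def sigmoid(z: float) -> float:
    """Map a real-valued score z to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def odds(p: float) -> float:
    """Odds of the positive class: p / (1 - p)."""
    return p / (1.0 - p)

z = 0.8                        # hypothetical linear score, e.g. b0 + b1 * x
p = sigmoid(z)                 # probability of the positive class
log_odds = math.log(odds(p))   # recovers z up to floating-point error

print(p, odds(p), log_odds)
```

Taking the log of the odds gives back the linear score, which is why the coefficients of a logistic regression are usually read as changes in log odds.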

from sklearn.linear_model import LogisticRegression

LR = LogisticRegression(penalty='l2', C=1e5)

LR = LR.fit(X_train, y_train)

y_predict = LR.predict(X_test)

LR.coef_
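The snippet above assumes that `X_train`, `X_test`, and `y_train` already exist. A self-contained sketch of the same steps might look like the following; the synthetic data from `make_classification`, the split sizes, the seed, and `max_iter=1000` are all assumptions made for illustration. The `penalty` argument is left at its default, which is L2.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic binary data; sample count, feature count and seed are arbitrary.
X, y = make_classification(n_samples=200, n_features=4, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# C is the inverse of the regularization strength, so a large C
# means the (default L2) penalty has very little effect.
LR = LogisticRegression(C=1e5, max_iter=1000)
LR.fit(X_train, y_train)

y_predict = LR.predict(X_test)      # hard class labels (0 or 1)
y_prob = LR.predict_proba(X_test)   # one probability column per class

print(LR.coef_.shape)               # one row of coefficients for a binary problem
```

`predict` thresholds the positive-class probability at 0.5, while `predict_proba` exposes the probabilities themselves, which is what threshold-based curves such as ROC need.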

Confusion Matrix

| | Predicted True | Predicted False |
|---|---|---|
| Actual True | TP | FN |
| Actual False | FP | TN |
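To make the four cells concrete, here is a small sketch that counts them from two label lists. The function name `confusion_counts` and the example labels are made up for illustration.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FN, FP, TN for a binary problem."""
    tp = fn = fp = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if actual == positive and predicted == positive:
            tp += 1   # actual true, predicted true
        elif actual == positive:
            fn += 1   # actual true, predicted false
        elif predicted == positive:
            fp += 1   # actual false, predicted true
        else:
            tn += 1   # actual false, predicted false
    return tp, fn, fp, tn

# Hypothetical labels for eight samples.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]

tp, fn, fp, tn = confusion_counts(y_true, y_pred)
print(tp, fn, fp, tn)  # 3 1 1 3
```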

FP is called a type 1 error, and FN is called a type 2 error.

Accuracy is the ratio of correct predictions to all predictions.

Sensitivity (recall) is how well the positive class is predicted: of all actual positives, what percentage is captured.

Precision is, out of all positive predictions, how many are correct. There is a trade-off between recall and precision.

Specificity is how well the negative class is predicted. It is the recall for class 0.

F1 is the harmonic mean of precision and recall. It captures the trade-off between recall and precision.
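The definitions above can be written directly in terms of the confusion matrix counts. The sketch below uses invented counts (40 TP, 10 FN, 20 FP, 30 TN) and a hypothetical helper name, `classification_metrics`.

```python
def classification_metrics(tp, fn, fp, tn):
    """Compute the basic binary metrics from confusion matrix counts."""
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    recall = tp / (tp + fn)          # sensitivity: share of actual positives found
    precision = tp / (tp + fp)       # share of positive predictions that are right
    specificity = tn / (tn + fp)     # recall for the negative class
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return {
        "accuracy": accuracy,
        "recall": recall,
        "precision": precision,
        "specificity": specificity,
        "f1": f1,
    }

# Made-up counts for 100 samples.
metrics = classification_metrics(tp=40, fn=10, fp=20, tn=30)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these counts, recall (0.8) is higher than precision (about 0.667), and F1 (about 0.727) sits between them, closer to the lower value as a harmonic mean does.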

Classification Error Metrics

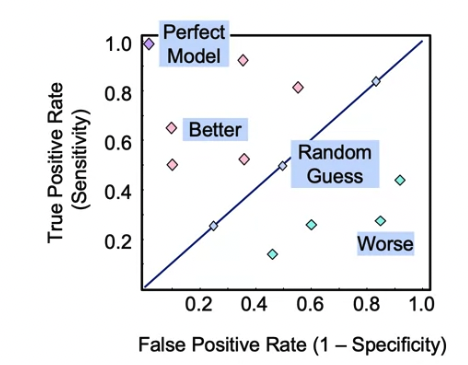

ROC

The Receiver Operating Characteristic (ROC) curve is a plot of the True Positive Rate (TPR, sensitivity) against the False Positive Rate (FPR, 1 - specificity) as the classification threshold varies.

It works better for data with balanced classes.
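To show where the points of an ROC curve come from, here is a minimal sketch in plain Python that sweeps the threshold over the predicted scores and computes (FPR, TPR) at each step, then estimates the area under the curve with the trapezoid rule. The labels, scores, and function names are invented for illustration; in practice `roc_curve` and `roc_auc_score` from `sklearn.metrics` do this work.

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) pairs, from the strictest threshold to the loosest."""
    positives = sum(y_true)
    negatives = len(y_true) - positives
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points

def area_under_curve(points):
    """Trapezoid rule over consecutive (x, y) points."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area

# Hypothetical labels and predicted probabilities for six samples.
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]

points = roc_points(y_true, scores)
auc = area_under_curve(points)
print(points)
print(auc)
```

The curve always starts at (0, 0) and ends at (1, 1); a model that ranks every positive above every negative reaches an area of 1.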

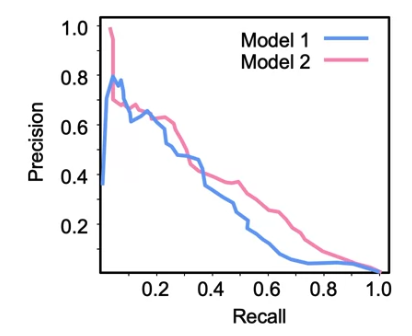

Precision-Recall Curves

The precision-recall curve shows the trade-off between precision and recall as the classification threshold varies.

It works better for data with imbalanced classes.
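The same threshold sweep can be used to trace precision against recall. The sketch below uses a small, deliberately imbalanced example (2 positives out of 8); the data and the function name `precision_recall_points` are assumptions for illustration, and `precision_recall_curve` from `sklearn.metrics` is the usual tool.

```python
def precision_recall_points(y_true, scores):
    """Return (threshold, precision, recall) triples, strictest threshold first."""
    positives = sum(y_true)
    points = []
    for threshold in sorted(set(scores), reverse=True):
        # Labels of every sample predicted positive at this threshold.
        predicted_positive = [y for y, s in zip(y_true, scores) if s >= threshold]
        tp = sum(predicted_positive)
        precision = tp / len(predicted_positive)
        recall = tp / positives
        points.append((threshold, precision, recall))
    return points

# Hypothetical imbalanced data: only two of the eight samples are positive.
y_true = [1, 0, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.5, 0.4, 0.3, 0.2, 0.1]

pr_points = precision_recall_points(y_true, scores)
for threshold, precision, recall in pr_points:
    print(f"threshold={threshold:.1f} precision={precision:.2f} recall={recall:.2f}")
```

Lowering the threshold pushes recall up to 1.0 while precision falls to the share of positives in the data (0.25 here). Neither quantity uses the true negatives, so a large negative class does not inflate the curve.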

Multiple Class Error Metrics

$\text{Accuracy} = \frac{TP_1 + TP_2 + TP_3}{\text{Total}}$
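For a three-class problem the true positives of each class are the diagonal entries of the confusion matrix, so the formula amounts to the diagonal sum divided by the total count. A minimal sketch with an invented 3x3 matrix (rows are actual classes, columns are predicted classes):

```python
# Hypothetical confusion matrix for three classes.
confusion = [
    [5, 1, 0],   # actual class 1
    [2, 6, 1],   # actual class 2
    [0, 1, 4],   # actual class 3
]

# TP1 + TP2 + TP3: the entries where actual and predicted class agree.
correct = sum(confusion[i][i] for i in range(len(confusion)))
total = sum(sum(row) for row in confusion)

accuracy = correct / total
print(accuracy)  # 0.75
```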

Code

from sklearn.metrics import accuracy_score

accuracy_value = accuracy_score(y_test, y_predict)

from sklearn.metrics import precision_score, recall_score, f1_score, roc_auc_score, confusion_matrix, roc_curve, precision_recall_curve
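A short usage sketch of these functions on hand-made data follows. The label and score lists are invented; `y_score` stands in for the positive-class probabilities that a fitted model would return from `predict_proba`.

```python
from sklearn.metrics import (
    accuracy_score,
    confusion_matrix,
    f1_score,
    precision_score,
    recall_score,
    roc_auc_score,
)

# Hypothetical true labels, predicted labels and predicted probabilities.
y_test = [1, 1, 1, 0, 0, 0, 0, 1]
y_predict = [1, 0, 1, 0, 1, 0, 0, 1]
y_score = [0.9, 0.4, 0.8, 0.3, 0.6, 0.2, 0.1, 0.7]

# scikit-learn orders rows and columns by label value, so for labels 0 and 1
# the layout is [[TN, FP], [FN, TP]] with the negative class first.
cm = confusion_matrix(y_test, y_predict)
tn, fp, fn, tp = cm.ravel().tolist()

accuracy_value = accuracy_score(y_test, y_predict)
precision_value = precision_score(y_test, y_predict)
recall_value = recall_score(y_test, y_predict)
f1_value = f1_score(y_test, y_predict)

# ROC AUC is computed from scores or probabilities, not from hard labels.
auc_value = roc_auc_score(y_test, y_score)

print(cm)
print(accuracy_value, precision_value, recall_value, f1_value, auc_value)
```

Note that the label-based metrics take `y_predict`, while `roc_auc_score`, `roc_curve`, and `precision_recall_curve` expect continuous scores.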
